1.0. What's covered in the blog?

1) Apache documentation on sub-workflows

2) A sample program that includes the components of an Oozie workflow application with a Java main action and a sub-workflow containing a Sqoop action. Scripts/code, a sample dataset, and commands are included. Oozie actions covered: Java action, Sqoop action (MySQL database).

Versions:

Oozie 3.3.0, Sqoop (1.4.2) with MySQL (5.1.69)

Related blogs:

Blog 1: Oozie workflow - hdfs and email actions

Blog 2: Oozie workflow - hdfs, email and hive actions

Blog 3: Oozie workflow - sqoop action (Hive-mysql; sqoop export)

Blog 4: Oozie workflow - java map-reduce (new API) action

Blog 5: Oozie workflow - streaming map-reduce (python) action

Blog 6: Oozie workflow - java main action

Blog 7: Oozie workflow - Pig action

Blog 8: Oozie sub-workflow

Blog 9a: Oozie coordinator job - time-triggered sub-workflow, fork-join control and decision control

Blog 9b: Oozie coordinator jobs - file triggered

Blog 9c: Oozie coordinator jobs - dataset availability triggered

Blog 10: Oozie bundle jobs

Blog 11a: Oozie Java API for interfacing with Oozie workflows

Blog 12: Oozie workflow - shell action + passing output from one action to another

2.0. Apache documentation on sub-workflows

The sub-workflow action runs a child workflow job. The child workflow job can be in the same Oozie system or in another Oozie system. The parent workflow job waits until the child workflow job has completed.

Syntax:
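As given in the Oozie 3.3.0 workflow specification, the sub-workflow action has the general shape shown below. Bracketed values are placeholders to be replaced with real names and paths; the elements are explained in the lines that follow.

```xml
<workflow-app name="[WF-DEF-NAME]" xmlns="uri:oozie:workflow:0.1">
    ...
    <action name="[NODE-NAME]">
        <sub-workflow>
            <app-path>[WF-APPLICATION-PATH]</app-path>
            <propagate-configuration/>
            <configuration>
                <property>
                    <name>[PROPERTY-NAME]</name>
                    <value>[PROPERTY-VALUE]</value>
                </property>
                ...
            </configuration>
        </sub-workflow>
        <ok to="[NODE-NAME]"/>
        <error to="[NODE-NAME]"/>
    </action>
    ...
</workflow-app>
```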

The child workflow job runs in the same Oozie system instance where the parent workflow job is running.

The app-path element specifies the path to the workflow application of the child workflow job.

The propagate-configuration flag, if present, indicates that the workflow job configuration should be propagated to the child workflow.

The configuration section can be used to specify the job properties that are required to run the child workflow job. The configuration of the sub-workflow action can be parameterized (templatized) using EL expressions.

Link to Apache documentation:

http://oozie.apache.org/docs/3.3.0/WorkflowFunctionalSpec.html#a3.2.6_Sub-workflow_Action

Note:

For a typical on-demand workflow, the core components are job.properties and workflow.xml. A sub-workflow needs its own workflow.xml that defines the activities to run in the sub-workflow, and the parent workflow references it. To keep things tidy, it is best to hold the sub-workflow's core components in a sub-directory. A single job.properties is sufficient.
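As a minimal sketch of how the parent workflow might reference the sub-workflow, the action below points app-path at the sub-directory that holds the child workflow.xml. The node names (sqoopSubWorkflow, end, killJob) and the property subWorkflowAppPath are assumptions made for illustration; they are not taken from the sample application.

```xml
<!-- Fragment of the parent workflow.xml (hypothetical names throughout) -->
<action name="sqoopSubWorkflow">
    <sub-workflow>
        <!-- Assumed property, set in job.properties to the sub-directory
             that contains the child workflow.xml -->
        <app-path>${subWorkflowAppPath}</app-path>
        <!-- Pass the parent's job configuration on to the child workflow -->
        <propagate-configuration/>
    </sub-workflow>
    <ok to="end"/>
    <error to="killJob"/>
</action>
```

With propagate-configuration present, properties defined once in job.properties are visible to the child workflow, which is one way a single job.properties can serve both workflows.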

3.0. Sample workflow application

The workflow has two actions - one is a java main action and the other is a sub-workflow action.

The Java main action parses log files on HDFS and generates a report.

The sub-workflow action executes after the Java main action succeeds, and exports the report from HDFS to a MySQL database using Sqoop.
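The child workflow for such a flow could look roughly like the sketch below: a single Sqoop action that exports the report directory to a MySQL table. The workflow name, the node names, and every ${...} property (mysqlJdbcUrl, mysqlUser, mysqlPassword, mysqlTable, reportDir) are hypothetical and would need to match the values in job.properties; this is not the post's actual workflow definition.

```xml
<!-- Child workflow.xml kept in the sub-workflow directory (hypothetical names) -->
<workflow-app name="SqoopExportSubWorkflow" xmlns="uri:oozie:workflow:0.2">
    <start to="sqoopExport"/>

    <action name="sqoopExport">
        <sqoop xmlns="uri:oozie:sqoop-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <!-- All ${...} values are assumed job properties -->
            <command>export --connect ${mysqlJdbcUrl} --username ${mysqlUser} --password ${mysqlPassword} --table ${mysqlTable} --export-dir ${reportDir} -m 1</command>
        </sqoop>
        <ok to="end"/>
        <error to="killJob"/>
    </action>

    <kill name="killJob">
        <message>Sqoop export failed: ${wf:errorMessage(wf:lastErrorNode())}</message>
    </kill>

    <end name="end"/>
</workflow-app>
```

Oozie splits the command element on whitespace, so argument values that contain spaces are better passed as separate arg elements. In the parent workflow, the Java main action's ok transition would name the sub-workflow action node so that the export runs only after the report has been generated.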

Pictorial overview:

Components of such a workflow application:

Application details:

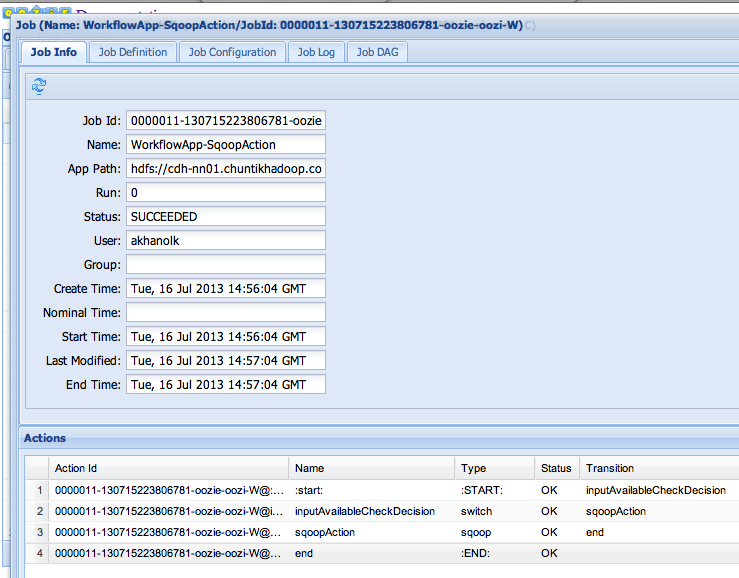

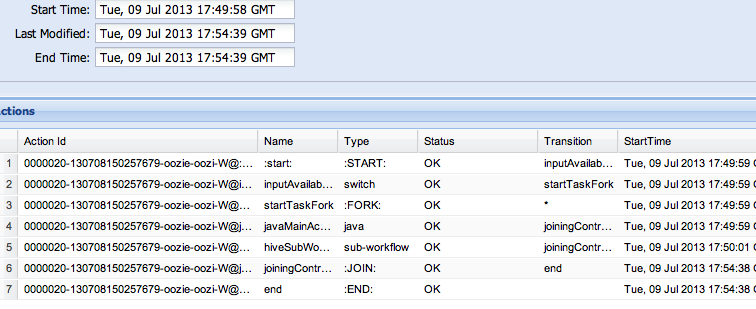

Oozie web console - screenshots: