1. Documentation on the Oozie pig action

2. A sample workflow that includes an Oozie pig action to process some syslog-generated log files. Instructions on loading the sample data and running the workflow are provided, along with some notes based on what I learned.

Versions covered:

Oozie 3.3.0; Pig 0.10.0

Related blogs:

Blog 1: Oozie workflow - hdfs and email actions

Blog 2: Oozie workflow - hdfs, email and hive actions

Blog 3: Oozie workflow - sqoop action (Hive-mysql; sqoop export)

Blog 4: Oozie workflow - java map-reduce (new API) action

Blog 5: Oozie workflow - streaming map-reduce (python) action

Blog 6: Oozie workflow - java main action

Blog 7: Oozie workflow - Pig action

Blog 8: Oozie sub-workflow

Blog 9a: Oozie coordinator job - time-triggered sub-workflow, fork-join control and decision control

Blog 9b: Oozie coordinator jobs - file triggered

Blog 9c: Oozie coordinator jobs - dataset availability triggered

Blog 10: Oozie bundle jobs

Blog 11a: Oozie Java API for interfacing with oozie workflows

Blog 11b: Oozie Web Service API for interfacing with oozie workflows

Your thoughts/updates:

If you want to share your thoughts/updates, email me at airawat.blog@gmail.com.

2.0. About the Oozie Pig Action

Excerpt from the Apache Oozie documentation:

The pig action starts a Pig job.

The workflow job will wait until the pig job completes before continuing to the next action.

The pig action has to be configured with the job-tracker, name-node, pig script and the necessary parameters and configuration to run the Pig job.

A pig action can be configured to perform HDFS files/directories cleanup before starting the Pig job. This capability enables Oozie to retry a Pig job in the situation of a transient failure (Pig creates temporary directories for intermediate data, thus a retry without cleanup would fail).

Hadoop JobConf properties can be specified in a JobConf XML file bundled with the workflow application or they can be indicated inline in the pig action configuration.

The configuration properties are loaded in the following order: job-xml, then configuration. Later values override earlier values.

Inline property values can be parameterized (templatized) using EL expressions.

The Hadoop mapred.job.tracker and fs.default.name properties must not be present in the job-xml and inline configuration.

As with Hadoop map-reduce jobs, it is possible to add files and archives to be available to the Pig job.

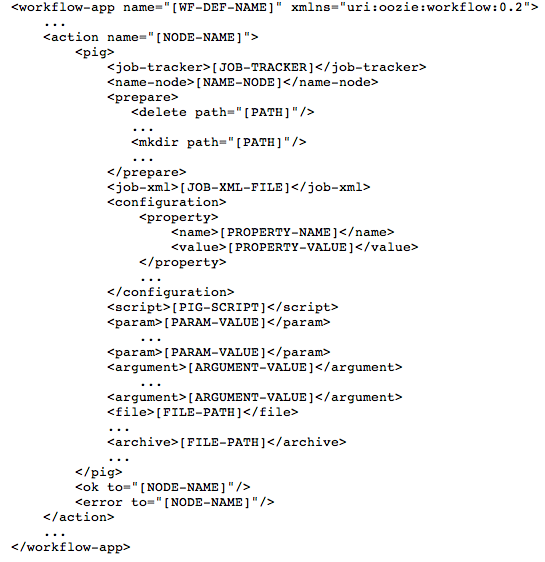

Syntax for Pig actions in Oozie schema 0.2:
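
The element layout can be sketched as follows. This is a template reconstructed from the Oozie workflow specification linked further down, not a runnable workflow: every bracketed name is a placeholder, and the linked documentation remains the authoritative reference.

```xml
<workflow-app name="[WF-DEF-NAME]" xmlns="uri:oozie:workflow:0.2">
    ...
    <action name="[NODE-NAME]">
        <pig>
            <job-tracker>[JOB-TRACKER]</job-tracker>
            <name-node>[NAME-NODE]</name-node>
            <!-- Optional: paths to delete or create before the job starts -->
            <prepare>
                <delete path="[PATH]"/>
                ...
                <mkdir path="[PATH]"/>
                ...
            </prepare>
            <!-- Optional: JobConf file bundled with the workflow application -->
            <job-xml>[JOB-XML-FILE]</job-xml>
            <!-- Optional: inline JobConf properties; these override job-xml -->
            <configuration>
                <property>
                    <name>[PROPERTY-NAME]</name>
                    <value>[PROPERTY-VALUE]</value>
                </property>
                ...
            </configuration>
            <script>[PIG-SCRIPT]</script>
            <param>[PARAM-VALUE]</param>
            ...
            <argument>[ARGUMENT-VALUE]</argument>
            ...
            <file>[FILE-PATH]</file>
            ...
            <archive>[FILE-PATH]</archive>
            ...
        </pig>
        <ok to="[NODE-NAME]"/>
        <error to="[NODE-NAME]"/>
    </action>
    ...
</workflow-app>
```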

The prepare element, if present, indicates a list of paths to delete before starting the job. This should be used exclusively for directory cleanup for the job to be executed.

The job-xml element, if present, must refer to a Hadoop JobConf job.xml file bundled in the workflow application. The job-xml element is optional and as of schema 0.4, multiple job-xml elements are allowed in order to specify multiple Hadoop JobConf job.xml files.

The configuration element, if present, contains JobConf properties for the underlying Hadoop jobs.

Properties specified in the configuration element override properties specified in the file specified in the job-xml element.

External Stats can be turned on/off by specifying the property oozie.action.external.stats.write as true or false in the configuration element of workflow.xml. The default value for this property is false.

The inline and job-xml configuration properties are passed to the Hadoop jobs submitted by Pig runtime.

The script element contains the pig script to execute. The pig script can be templatized with variables of the form ${VARIABLE}. The values of these variables can then be specified using the params element.

NOTE: Oozie will perform the parameter substitution before firing the pig job. This is different from the parameter substitution mechanism provided by Pig, which has a few limitations.

The params element, if present, contains parameters to be passed to the pig script.
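
To make the element descriptions above concrete, here is a minimal sketch of a workflow with a single pig action. It is an illustration under stated assumptions, not the workflow used in this post: the script name report.pig, the INPUT and OUTPUT parameter names, and the inputDir property are hypothetical, while jobTracker, nameNode, queueName and outputDir are assumed to be defined in job.properties. Note that in the schema each parameter goes in its own param element.

```xml
<workflow-app name="WorkflowWithPigAction" xmlns="uri:oozie:workflow:0.2">
    <start to="pigAction"/>
    <action name="pigAction">
        <pig>
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <!-- Delete output left by an earlier attempt so a retry can succeed -->
            <prepare>
                <delete path="${outputDir}"/>
            </prepare>
            <!-- Inline properties; values are parameterized with EL expressions -->
            <configuration>
                <property>
                    <name>mapred.job.queue.name</name>
                    <value>${queueName}</value>
                </property>
                <property>
                    <name>oozie.action.external.stats.write</name>
                    <value>true</value>
                </property>
            </configuration>
            <!-- Hypothetical script bundled with the workflow application -->
            <script>report.pig</script>
            <!-- Hypothetical parameters; Oozie substitutes them into the
                 script before the pig job is fired -->
            <param>INPUT=${inputDir}</param>
            <param>OUTPUT=${outputDir}</param>
        </pig>
        <ok to="end"/>
        <error to="killJob"/>
    </action>
    <kill name="killJob">
        <message>Killed job due to error: ${wf:errorMessage(wf:lastErrorNode())}</message>
    </kill>
    <end name="end"/>
</workflow-app>
```

Inside the hypothetical report.pig, the two values would be referenced as ${INPUT} and ${OUTPUT}, for example in the LOAD and STORE statements.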

Apache Oozie pig action documentation:

http://archive.cloudera.com/cdh4/cdh/4/oozie/WorkflowFunctionalSpec.html#a3.2.3_Pig_Action

3.0. Sample workflow application

Highlights: For this exercise, I have loaded some syslog-generated logs into HDFS and am running a Pig script, through Oozie, to parse the logs and generate a report.

Components of workflow:

Pictorial overview of workflow:

Oozie web console - screenshots:

Hi Anagha,

My workflow.xml is:

______________________________________

${jobTracker}

${nameNode}

"Killed job due to error: ${wf:errorMessage(wf:lastErrorNode())}"

______________________________________

job.properties is:

______________________________________

nameNode=maprfs:///

jobTracker=maprfs:///

queueName=default

oozie.libpath=${nameNode}opt/mapr/oozie/oozie-4.0.0/lib

oozie.use.system.libpath=true

oozie.wf.rerun.failnodes=true

oozieProjectRoot=${nameNode}user/apanda/oozieProject

appPath=${oozieProjectRoot}/workflowPigAction

oozie.wf.application.path=${appPath}

outputDir=${appPath}/output

______________________________________

The Oozie web console shows the error below:

2014-02-27 17:45:13,023 WARN PigActionExecutor:542 - USER[apanda] GROUP[-] TOKEN[] APP[WorkflowWithPigAction] JOB[0000002-140227171712374-oozie-mapr-W] ACTION[0000002-140227171712374-oozie-mapr-W@pigAction] Launcher ERROR, reason: Main class [org.apache.oozie.action.hadoop.PigMain], main() threw exception, org/apache/pig/Main

2014-02-27 17:45:13,024 WARN PigActionExecutor:542 - USER[apanda] GROUP[-] TOKEN[] APP[WorkflowWithPigAction] JOB[0000002-140227171712374-oozie-mapr-W] ACTION[0000002-140227171712374-oozie-mapr-W@pigAction] Launcher exception: org/apache/pig/Main

java.lang.NoClassDefFoundError: org/apache/pig/Main

at org.apache.oozie.action.hadoop.PigMain.runPigJob(PigMain.java:324)

at org.apache.oozie.action.hadoop.PigMain.run(PigMain.java:219)

at org.apache.oozie.action.hadoop.LauncherMain.run(LauncherMain.java:37)

2014-02-27 17:45:13,322 INFO ActionEndXCommand:539 - USER[apanda] GROUP[-] TOKEN[] APP[WorkflowWithPigAction] JOB[0000002-140227171712374-oozie-mapr-W] ACTION[0000002-140227171712374-oozie-mapr-W@pigAction] ERROR is considered as FAILED for SLA

2014-02-27 17:45:16,578 INFO ActionStartXCommand:539 - USER[apanda] GROUP[-] TOKEN[] APP[WorkflowWithPigAction]

2014-02-27 17:45:16,953 WARN CoordActionUpdateXCommand:542 - USER[apanda] GROUP[-] TOKEN[] APP[WorkflowWithPigAction] JOB[0000002-140227171712374-oozie-mapr-W] ACTION[-] E1100: Command precondition does not hold before execution, [, coord action is null], Error Code: E1100

My mapper and reducer jobs succeeded, but the Oozie workflow gets killed every time after that. Do I need to make any changes to my workflow.xml?

Kindly suggest. I found your blog quite helpful and have referred to it.

Regards,

-Amit

Sorry the workflow.xml did not get pasted correctly

Your sample code works well! Thanks for the good job.

Hi,

I got this error while scheduling the sample job: java.lang.ClassNotFoundException: Class org.apache.oozie.action.hadoop.PigMain not found.

I am using Cloudera 5.0.0 Enterprise.

Please let me know if you know how to fix this.

Hi Narasimman, please check the Pig jars and place them in the lib folder in the root folder while executing the Pig job.
