1.0. What's in this blog?

1. Introduction to sequence file format

2. Sample code to create a sequence file (compressed and uncompressed) from a text file in a MapReduce program, and to read a sequence file.

2.0. What's a Sequence File?

2.0.1. About sequence files:

A sequence file is a persistent data structure for binary key-value pairs.

2.0.2. Construct:

Sequence files include sync points every few records. The sync points align with record boundaries, so a reader that starts at an arbitrary position in the file can resynchronize with the start of the next record. This is what allows sequence files to be split for MapReduce operations. Sequence files support record-level and block-level compression.
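To illustrate how a sync point lets a reader that starts mid-file (for example, at the start of an input split) find the next record boundary, here is a simplified in-memory sketch. It assumes, as in Hadoop's implementation, that a sync point is written as the int -1 (an escape value) followed by the file's 16-byte sync marker. The class and method names are hypothetical. Hadoop's own `SequenceFile.Reader.sync(long)` also handles streams, buffering and end-of-file, which this version ignores.

```java
class SyncScan {
    /**
     * Scans forward from 'from' for the sync marker and returns the offset just
     * past it (where the next record or block begins), or -1 if the marker does
     * not occur again. Simplified: works on an in-memory byte array.
     */
    static int nextRecordStartAfter(byte[] file, int from, byte[] syncMarker) {
        outer:
        for (int i = Math.max(from, 0); i + syncMarker.length <= file.length; i++) {
            for (int j = 0; j < syncMarker.length; j++) {
                if (file[i + j] != syncMarker[j]) {
                    continue outer; // mismatch: try the next starting offset
                }
            }
            return i + syncMarker.length;
        }
        return -1;
    }

    public static void main(String[] args) {
        // Stand-in for the per-file 16-byte sync marker stored in the header.
        byte[] marker = new byte[16];
        for (int i = 0; i < marker.length; i++) {
            marker[i] = (byte) (0xA0 + i);
        }
        // Layout: 5 bytes of "mid-record" data, the -1 escape, the marker, one record byte.
        byte[] file = new byte[5 + 4 + 16 + 1];
        for (int i = 5; i < 9; i++) {
            file[i] = (byte) 0xFF; // int -1, big-endian
        }
        System.arraycopy(marker, 0, file, 9, 16);
        file[25] = 42;
        System.out.println(nextRecordStartAfter(file, 2, marker)); // prints 25
    }
}
```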

Apache documentation: http://hadoop.apache.org/docs/current/api/org/apache/hadoop/io/SequenceFile.html

Excerpts from Hadoop: The Definitive Guide:


"A sequence file consists of a header followed by one or more records. The first three bytes of a sequence file are the bytes SEQ, which acts as a magic number, followed by a single byte representing the version number. The header contains other fields, including the names of the key and value classes, compression details, user-defined metadata, and the sync marker.

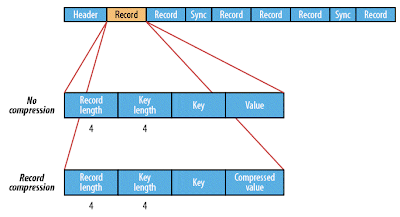

Structure of a sequence file with and without record compression:

The format for record compression is almost identical to no compression, except the value bytes are compressed using the codec defined in the header. Note that keys are not compressed.

Structure of a sequence file with and without block compression:

Block compression compresses multiple records at once; it is therefore more compact than and should generally be preferred over record compression because it has the opportunity to take advantage of similarities between records. A sync marker is written before the start of every block. The format of a block is a field indicating the number of records in the block, followed by four compressed fields: the key lengths, the keys, the value lengths, and the values."
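The claim that block compression is usually more compact is easy to try out. The following is a rough sketch, not Hadoop code. It uses `java.util.zip.Deflater` as a stand-in for the configured codec. It lays out one block as described: the record count, then the key-lengths, keys, value-lengths and values buffers, each compressed and prefixed with its compressed size. It then compares the block's size with the same records in the record-compressed layout. Hadoop encodes the count, sizes and lengths as variable-length integers and also writes sync markers. This sketch uses fixed 4-byte ints and leaves sync markers out of both layouts. The class name and sample data are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.Deflater;

class BlockVsRecord {
    // Compresses a buffer with zlib/deflate (stand-in for the file's codec).
    static byte[] deflate(byte[] input) {
        Deflater deflater = new Deflater();
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        while (!deflater.finished()) {
            int n = deflater.deflate(buf);
            out.write(buf, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }

    // Appends a 4-byte big-endian int (Hadoop uses variable-length ints instead).
    static void writeInt(ByteArrayOutputStream out, int v) {
        out.write(v >>> 24);
        out.write(v >>> 16);
        out.write(v >>> 8);
        out.write(v);
    }

    // Appends a buffer's compressed size followed by its compressed bytes.
    static void writeCompressed(ByteArrayOutputStream out, ByteArrayOutputStream raw) {
        byte[] c = deflate(raw.toByteArray());
        writeInt(out, c.length);
        out.write(c, 0, c.length);
    }

    // One block: uncompressed record count, then the four compressed buffers.
    static byte[] buildBlock(List<byte[]> keys, List<byte[]> values) {
        ByteArrayOutputStream keyLens = new ByteArrayOutputStream();
        ByteArrayOutputStream keyData = new ByteArrayOutputStream();
        ByteArrayOutputStream valLens = new ByteArrayOutputStream();
        ByteArrayOutputStream valData = new ByteArrayOutputStream();
        for (int i = 0; i < keys.size(); i++) {
            byte[] k = keys.get(i), v = values.get(i);
            writeInt(keyLens, k.length);
            keyData.write(k, 0, k.length);
            writeInt(valLens, v.length);
            valData.write(v, 0, v.length);
        }
        ByteArrayOutputStream block = new ByteArrayOutputStream();
        writeInt(block, keys.size());
        writeCompressed(block, keyLens);
        writeCompressed(block, keyData);
        writeCompressed(block, valLens);
        writeCompressed(block, valData);
        return block.toByteArray();
    }

    // Same records, record-compressed: two ints, raw key, individually compressed value.
    static int recordCompressedSize(List<byte[]> keys, List<byte[]> values) {
        int total = 0;
        for (int i = 0; i < keys.size(); i++) {
            total += 4 + 4 + keys.get(i).length + deflate(values.get(i)).length;
        }
        return total;
    }

    public static void main(String[] args) {
        List<byte[]> keys = new ArrayList<>();
        List<byte[]> values = new ArrayList<>();
        for (int i = 0; i < 100; i++) {
            keys.add(("key-" + i).getBytes(StandardCharsets.UTF_8));
            values.add(("value-" + i + ": the quick brown fox jumps over the lazy dog")
                    .getBytes(StandardCharsets.UTF_8));
        }
        System.out.println("block-compressed:  " + buildBlock(keys, values).length + " bytes");
        System.out.println("record-compressed: " + recordCompressedSize(keys, values) + " bytes");
    }
}
```

With many similar records, the single compressed values buffer can reuse repeated text across records. Each individually compressed value has to start from scratch.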

Uncompressed, record-compressed and block-compressed sequence files all share the same header. The details below are taken from the Apache documentation on sequence files.

SequenceFile Header

- version - 3 bytes of magic header SEQ, followed by 1 byte of actual version number (e.g. SEQ4 or SEQ6)

- keyClassName - key class

- valueClassName - value class

- compression - A boolean which specifies if compression is turned on for keys/values in this file.

- blockCompression - A boolean which specifies if block-compression is turned on for keys/values in this file.

- compression codec - CompressionCodec class which is used for compression of keys and/or values (if compression is enabled).

- metadata - SequenceFile.Metadata for this file.

- sync - A sync marker to denote end of the header.
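Checking the first four bytes of a file is a quick way to tell whether it is a sequence file and which format version wrote it. The helper below is hypothetical and not part of Hadoop. Note that the version is a raw byte value (current Hadoop writes 6), not an ASCII digit. So "SEQ6" in the list above means the bytes 'S', 'E', 'Q' followed by the byte 6.

```java
import java.nio.charset.StandardCharsets;

class SeqHeaderCheck {
    /**
     * Returns the format version if the data starts with 'S','E','Q' plus a
     * version byte, or -1 if it cannot be a sequence file header.
     */
    static int sequenceFileVersion(byte[] firstBytes) {
        if (firstBytes.length < 4) {
            return -1; // too short for the magic bytes and the version byte
        }
        if (firstBytes[0] != 'S' || firstBytes[1] != 'E' || firstBytes[2] != 'Q') {
            return -1; // magic number missing
        }
        return firstBytes[3] & 0xFF; // raw version byte
    }

    public static void main(String[] args) {
        byte[] seq = {'S', 'E', 'Q', 6};
        byte[] text = "hello".getBytes(StandardCharsets.US_ASCII);
        System.out.println(sequenceFileVersion(seq));  // prints 6
        System.out.println(sequenceFileVersion(text)); // prints -1
    }
}
```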

Uncompressed SequenceFile Format

- Header
- Record
  - Record length
  - Key length
  - Key
  - Value
- A sync-marker every few hundred bytes or so.
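The record layout above can be sketched with `java.nio.ByteBuffer`. This is a simplified illustration, not Hadoop's writer, and it makes three assumptions. First, the key and value are taken to be already-serialized bytes (Hadoop serializes them first, for example through a Writable's `write` method). Second, the record length is the combined size of the key and value bytes, which is what Hadoop's `SequenceFile.Writer.append` writes. Third, the header and sync markers are left out. The class name is hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class UncompressedRecord {
    // Encodes one record: record length, key length, key bytes, value bytes.
    // ByteBuffer writes ints big-endian by default, like java.io.DataOutput.
    static byte[] encode(byte[] key, byte[] value) {
        ByteBuffer buf = ByteBuffer.allocate(4 + 4 + key.length + value.length);
        buf.putInt(key.length + value.length); // record length = key bytes + value bytes
        buf.putInt(key.length);                // key length
        buf.put(key);                          // key
        buf.put(value);                        // value; its length is implied
        return buf.array();
    }

    // Decodes one record and returns {key, value}.
    static byte[][] decode(byte[] record) {
        ByteBuffer buf = ByteBuffer.wrap(record);
        int recordLength = buf.getInt();
        int keyLength = buf.getInt();
        byte[] key = new byte[keyLength];
        byte[] value = new byte[recordLength - keyLength]; // value length = record length - key length
        buf.get(key);
        buf.get(value);
        return new byte[][] {key, value};
    }

    public static void main(String[] args) {
        byte[] record = encode("k1".getBytes(StandardCharsets.UTF_8),
                               "hello".getBytes(StandardCharsets.UTF_8));
        System.out.println(record.length); // prints 15 (4 + 4 + 2 + 5)
        byte[][] kv = decode(record);
        System.out.println(new String(kv[0], StandardCharsets.UTF_8) + " -> "
                + new String(kv[1], StandardCharsets.UTF_8)); // prints k1 -> hello
    }
}
```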

Record-Compressed SequenceFile Format

- Header
- Record
  - Record length
  - Key length
  - Key
  - Compressed Value
- A sync-marker every few hundred bytes or so.
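In the record-compressed layout, only the value changes. Below is a sketch using `java.util.zip.Deflater`/`Inflater` as a stand-in for whatever CompressionCodec the header names. It treats keys and values as already-serialized bytes, and the helper names are hypothetical, not Hadoop's API. The key is written uncompressed, and the record length counts the compressed value bytes.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

class RecordCompressed {
    static byte[] deflate(byte[] input) {
        Deflater deflater = new Deflater();
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        while (!deflater.finished()) {
            out.write(buf, 0, deflater.deflate(buf));
        }
        deflater.end();
        return out.toByteArray();
    }

    static byte[] inflate(byte[] compressed) {
        Inflater inflater = new Inflater();
        inflater.setInput(compressed);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        try {
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                if (n == 0 && inflater.needsInput()) {
                    throw new IllegalStateException("truncated compressed value");
                }
                out.write(buf, 0, n);
            }
        } catch (DataFormatException e) {
            throw new IllegalStateException(e);
        } finally {
            inflater.end();
        }
        return out.toByteArray();
    }

    // Record: record length, key length, raw key, compressed value.
    static byte[] encode(byte[] key, byte[] value) {
        byte[] compressedValue = deflate(value);
        ByteBuffer buf = ByteBuffer.allocate(8 + key.length + compressedValue.length);
        buf.putInt(key.length + compressedValue.length); // counts the compressed value
        buf.putInt(key.length);
        buf.put(key);             // keys are not compressed
        buf.put(compressedValue); // only the value goes through the codec
        return buf.array();
    }

    // Decodes one record and returns {key, decompressed value}.
    static byte[][] decode(byte[] record) {
        ByteBuffer buf = ByteBuffer.wrap(record);
        int recordLength = buf.getInt();
        int keyLength = buf.getInt();
        byte[] key = new byte[keyLength];
        byte[] compressedValue = new byte[recordLength - keyLength];
        buf.get(key);
        buf.get(compressedValue);
        return new byte[][] {key, inflate(compressedValue)};
    }

    public static void main(String[] args) {
        byte[] value = "hello hello hello hello hello".getBytes(StandardCharsets.UTF_8);
        byte[][] kv = decode(encode("k1".getBytes(StandardCharsets.UTF_8), value));
        System.out.println(new String(kv[1], StandardCharsets.UTF_8));
    }
}
```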

Block-Compressed SequenceFile Format

- Header
- Record Block
  - Uncompressed number of records in the block
  - Compressed key-lengths block-size
  - Compressed key-lengths block
  - Compressed keys block-size
  - Compressed keys block
  - Compressed value-lengths block-size
  - Compressed value-lengths block
  - Compressed values block-size
  - Compressed values block
- A sync-marker every block.

2.0.3. Datatypes:

The keys and values need not be instances of Writable. Any type that can be serialized and deserialized by a Hadoop Serialization can be used.

2.0.4. Creating sequence files:

Uncompressed: create an instance of SequenceFile.Writer and call append() to add key-value pairs in order. For record- and block-compressed files, refer to the Apache documentation. When creating compressed files, the compression algorithm used for keys and/or values is chosen by supplying the appropriate CompressionCodec.
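A minimal sketch of writing all three kinds of sequence file with the option-based `SequenceFile.createWriter(Configuration, Writer.Option...)` factory found in Hadoop 2.x and later. It assumes the following:

- hadoop-common is on the classpath.
- The default file system is the local one, unless fs.defaultFS says otherwise.
- The output directory `/tmp/seqfile-demo` is a hypothetical example.

Using IntWritable line numbers as keys and Text lines as values is also just an illustrative choice. `DefaultCodec` (zlib) is the codec used for the compressed variants.

```java
import java.io.IOException;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.DefaultCodec;
import org.apache.hadoop.util.ReflectionUtils;

class SeqFileWriteExample {
    // Writes one record per line: key = line number, value = line text.
    static void write(Configuration conf, Path path, SequenceFile.CompressionType type,
                      String[] lines) throws IOException {
        // newInstance also hands the Configuration to the codec.
        CompressionCodec codec = ReflectionUtils.newInstance(DefaultCodec.class, conf);
        // NONE needs no codec; RECORD and BLOCK compress with the given codec.
        SequenceFile.Writer.Option compression = (type == SequenceFile.CompressionType.NONE)
                ? SequenceFile.Writer.compression(type)
                : SequenceFile.Writer.compression(type, codec);
        try (SequenceFile.Writer writer = SequenceFile.createWriter(conf,
                SequenceFile.Writer.file(path),
                SequenceFile.Writer.keyClass(IntWritable.class),
                SequenceFile.Writer.valueClass(Text.class),
                compression)) {
            IntWritable key = new IntWritable();
            Text value = new Text();
            for (int i = 0; i < lines.length; i++) {
                key.set(i);
                value.set(lines[i]);
                writer.append(key, value); // records are stored in append order
            }
        }
    }

    public static void main(String[] args) throws IOException {
        Configuration conf = new Configuration();
        Path dir = new Path(args.length > 0 ? args[0] : "/tmp/seqfile-demo"); // hypothetical directory
        String[] lines = {"first line", "second line", "third line"};
        for (SequenceFile.CompressionType type : SequenceFile.CompressionType.values()) {
            write(conf, new Path(dir, "example-" + type.name().toLowerCase() + ".seq"), type, lines);
        }
    }
}
```

The writer is reused for every append, and the key and value objects are refilled each time instead of being allocated per record.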

2.0.5. Reading data in sequence files:

Create an instance of SequenceFile.Reader and iterate through the entries by calling reader.next(key, value), which returns false once the end of the file is reached.
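A minimal sketch of that read loop, assuming Hadoop 2.x or later on the classpath and Writable key and value types. The key and value classes are not hard-coded: the reader reports them from the file header, and `ReflectionUtils.newInstance` creates the objects. The same loop reads uncompressed, record-compressed and block-compressed files, because the header tells the reader how to decode them. The class name and the "key<TAB>value" output format are illustrative.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.SequenceFile;
import org.apache.hadoop.io.Writable;
import org.apache.hadoop.util.ReflectionUtils;

class SeqFileReadExample {
    static List<String> readAll(Configuration conf, Path path) throws IOException {
        List<String> rows = new ArrayList<>();
        try (SequenceFile.Reader reader = new SequenceFile.Reader(conf, SequenceFile.Reader.file(path))) {
            // Key and value classes are read from the file header.
            Writable key = (Writable) ReflectionUtils.newInstance(reader.getKeyClass(), conf);
            Writable value = (Writable) ReflectionUtils.newInstance(reader.getValueClass(), conf);
            // next() refills the same objects and returns false at end of file.
            while (reader.next(key, value)) {
                rows.add(key + "\t" + value);
            }
        }
        return rows;
    }

    public static void main(String[] args) throws IOException {
        // args[0]: path of an existing sequence file
        for (String row : readAll(new Configuration(), new Path(args[0]))) {
            System.out.println(row);
        }
    }
}
```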

2.0.6. Usage

- Data storage for key-value type data

- Container for other files

- Efficient from a storage perspective (binary format) and from a MapReduce processing perspective (supports compression and splitting)

3.0. Creating a sequence file

4.0. Reading a sequence file

Covered already in the gist under section 3.

5.0. Any thoughts/comments

Any constructive criticism, additions, or insights are much appreciated.

Cheers!!

I want to thank you for the shared code. It helped with my thesis, so I really appreciate it. Greetings from Chile :)

Without implementing a SequenceFile.Writer instance, how could it work?

Great one. Really helps in understanding sequence files.

Hello. I followed your tutorial and adapted it to Hadoop 2.7.1. When I used SequenceFileInputFormat everything was OK, but when I tried to read the file I got an EOFException. I don't know exactly where the error is. I can think of three possibilities:

1. I made some mistake with SequenceFileInputFormat;

2. I made some mistake with SequenceFileOutputFormat;

3. The custom Writable type has an incorrect implementation of the public void readFields(DataInput in) method, because the error happens in this method.

I looked on the web for a reason but didn't find any satisfactory answer. Could you help me?
